The present document collects information on glass-type AR/MR devices in the context of 5G radio and network services. The primary scope of this Technical Report is the documentation of the following aspects:

- Providing formal definitions for the functional structures of AR glasses, including their capabilities and constraints

- Documenting core use cases for AR services over 5G and defining relevant processing functions and reference architectures

- Identifying media exchange formats and profiles relevant to the core use cases

- Identifying necessary content delivery transport protocols and capability exchange mechanisms, as well as suitable 5G system functionalities (including device, edge, and network) and required QoS (including radio access and core network technologies)

- Identifying key performance indicators and quality of experience factors

- Identifying relevant radio and system parameters (required bitrates, latencies, loss rates, range, etc.) to support the identified AR use cases and the required QoE

- Providing a detailed overall power analysis for AR-related media processing and communication

Glass-type AR/MR Device Functions

AR glasses contain various functions that are used to support a variety of different AR services, as highlighted by the different use cases.

The various functions that are essential for enabling AR glass-related services within an AR device functional structure include:

- Tracking and sensing

- Inside-out tracking for 6DoF user position

- Eye Tracking

- Hand Tracking

- Sensors

- Capturing

- Vision camera: capturing (in addition to tracking and sensing) of the user’s surroundings for vision related functions

- Media camera: capturing of scenes or objects for media data generation where required

NOTE: Vision and media camera logical functions may be mapped to the same physical camera, or to separate cameras. Camera devices may also be attached to other device hardware (AR glasses or smartphone), or exist as a separate external device.

- Microphones: capturing of audio sources including environmental audio sources as well as users’ voice.

- Basic AR functions

- 2D media encoders: encoders providing compressed versions of camera visual data, microphone audio data and/or other sensor data.

- 2D media decoders: media decoders to decode visual/audio 2D media to be rendered and presented

- Vision engine: engine which performs processing for AR related localisation, mapping, 6DoF pose generation, object detection etc., i.e. SLAM, object tracking, and media data objects. The main purpose of the vision engine is to “register” the device, i.e. the different sets of data from the real and virtual worlds are transformed into a single world coordinate system.

- Pose corrector: function for pose correction that helps stabilise AR media. Typically, this is done by asynchronous time warping (ATW) or late stage reprojection (LSR).

- AR/MR functions

- Immersive media decoders: media decoders to decode compressed immersive media as inputs to the immersive media renderer. Immersive media decoders include both 2D and 3D visual/audio media decoder functionalities.

- Immersive media encoders: encoders providing compressed versions of visual/audio immersive media data.

- Compositor: compositing layers of images at different levels of depth for presentation

- Immersive media renderer: the generation of one (monoscopic displays) or two (stereoscopic displays) eye buffers from the visual content, typically using GPUs. Rendering operations may be different depending on the rendering pipeline of the media, and may include 2D or 3D visual/audio rendering, as well as pose correction functionalities.

- Immersive media reconstruction: process of capturing the shape and appearance of real objects.

- Semantic perception: process of converting signals captured on the AR glasses into semantic concepts. Typically uses some form of AI/ML. Examples include object recognition, object classification, etc.

- Tethering and network interfaces for AR/MR immersive content delivery

- The AR glasses may be tethered through non-5G connectivity (wired, WiFi)

- The AR glasses may be tethered through 5G connectivity

- The AR glasses may be tethered through different flavours for 5G connectivity

- Physical Rendering

- Display: Optical see-through displays allow the user to see the real world “directly” (albeit through a set of optical elements). AR displays add virtual content by adding light on top of the light coming in from the real world.

- AR/MR Application

- An application that makes use of the AR and MR capabilities to provide a user experience.

Generic reference device functional structure device types

In TR 26.928 clause 4.8, different AR and VR device types were introduced. This clause provides an update and refinement, in particular for AR devices. The focus of this clause is mostly on functional components and not on the physical implementation of the glass/HMD. Also, in this context the device is viewed as a UE, i.e. the question is which functions are included in the UE.

A summary of the different device types is provided in Table 4.1. The table also covers:

- how the devices are connected to get access to information

- where the 5G Uu modem is expected to be placed

- where the basic AR functions are placed

- where the AR/MR functions are placed

- where the AR/MR application is running

- where the power supply/battery is placed.

In all glass device types, the sensors, cameras and microphones are on the device.

The definition for Split AR/MR in Table 4.1 is as follows:

- Split: the tethered device or external entity (cloud/edge) does some pre-processing (e.g. a pre-rendering of the viewport based on sensor and pose information), and the AR/MR device and/or tethered device performs a rendering considering the latest sensor information (e.g. applying pose correction). Different degrees of split exist, between different devices and entities. Similarly, vision engine functionalities and other AR/MR functions (such as AR/MR media reconstruction, encoding and decoding) can be subject to split computation.
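The device-side pose correction step in the split definition above can be illustrated with a rotation-only sketch. It is not the ATW or LSR algorithm of any particular runtime; it only computes the residual rotation between the pose used for pre-rendering and the latest pose, which a reprojection step would then apply to the pre-rendered frame. The function names, the (w, x, y, z) quaternion layout and the device-to-world convention are assumptions made for this illustration.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_conjugate(q):
    """Conjugate of a quaternion; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def correction_rotation(render_orientation, latest_orientation):
    """Residual rotation between the pre-rendering pose and the latest pose.

    Both inputs are assumed to be unit quaternions describing the
    device-to-world orientation. The result maps directions in the latest
    device frame to directions in the frame used for pre-rendering.
    """
    return quat_multiply(quat_conjugate(render_orientation), latest_orientation)


def rotation_angle_deg(q):
    """Magnitude of the rotation encoded by a unit quaternion, in degrees."""
    w, x, y, z = q
    return math.degrees(2.0 * math.atan2(math.sqrt(x * x + y * y + z * z), abs(w)))


def yaw_quat(deg):
    """Helper for the example: rotation of `deg` degrees about the Y axis."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, math.sin(half), 0.0)


# Hypothetical values: viewport pre-rendered at 10 degrees of yaw, while the
# head has turned to 12 degrees by the time the frame is displayed.
delta = correction_rotation(yaw_quat(10.0), yaw_quat(12.0))
print(round(rotation_angle_deg(delta), 6))
```

A rotation-only correction of this kind ignores head translation; whether that is acceptable depends on the rendering pipeline and on how the split is designed.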

| Device Type Name | Reference | Tethering | 5G Uu Modem | Basic AR Functions | AR/MR Functions | AR/MR Application | Power Supply |
|---|---|---|---|---|---|---|---|
| 5G Standalone AR UE | 1: STAR | N/A | Device | Device | Device/Split 1) | Device | Device |
| 5G EDGe-Dependent AR UE | 2: EDGAR | N/A | Device | Device | Split 1) | Cloud/Edge | Device |
| 5G WireLess Tethered AR UE | 3: WLAR | 802.11ad, 5G sidelink, etc. | Tethered device (phone/puck) | Device | Split 2) | Tethered device | Device |
| 5G Wired Tethered AR UE 3) | 4: WTAR | USB-C | Tethered device (phone/puck) | Tethered device | Split 2) | Tethered device | Tethered device |

1) Cloud/Edge

2) Phone/Puck and/or Cloud/Edge

3) Not considered in this document

The 5G Wired Tethered AR UE (WTAR) device type is for reference purposes only and is not considered in this document, as it is not included in the study item objectives.
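For readers who want to query the device type summary programmatically, the rows of Table 4.1 can be captured in a small data structure. The sketch below is illustrative only: the class name, field names and the `references_where` helper are assumptions of this example and not part of the report, and the table footnotes are noted in comments rather than modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceType:
    """One row of Table 4.1 (field names are illustrative)."""
    name: str
    reference: str
    tethering: str
    modem: str
    basic_ar_functions: str
    ar_mr_functions: str
    application: str
    power_supply: str


# Values copied from Table 4.1. For STAR and EDGAR, "Split" means split with
# cloud/edge; for WLAR and WTAR, split with phone/puck and/or cloud/edge.
DEVICE_TYPES = [
    DeviceType("5G Standalone AR UE", "STAR", "N/A",
               "Device", "Device", "Device/Split", "Device", "Device"),
    DeviceType("5G EDGe-Dependent AR UE", "EDGAR", "N/A",
               "Device", "Device", "Split", "Cloud/Edge", "Device"),
    DeviceType("5G WireLess Tethered AR UE", "WLAR", "802.11ad, 5G sidelink, etc.",
               "Tethered device", "Device", "Split", "Tethered device", "Device"),
    # WTAR is listed for reference only and is not considered in the report.
    DeviceType("5G Wired Tethered AR UE", "WTAR", "USB-C",
               "Tethered device", "Tethered device", "Split",
               "Tethered device", "Tethered device"),
]


def references_where(field, value):
    """Return the reference names of all device types whose `field` equals `value`."""
    return [d.reference for d in DEVICE_TYPES if getattr(d, field) == value]


# Which device types embed the 5G Uu modem in the glasses themselves?
print(references_where("modem", "Device"))
# Which device types run the AR/MR application in the cloud/edge?
print(references_where("application", "Cloud/Edge"))
```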

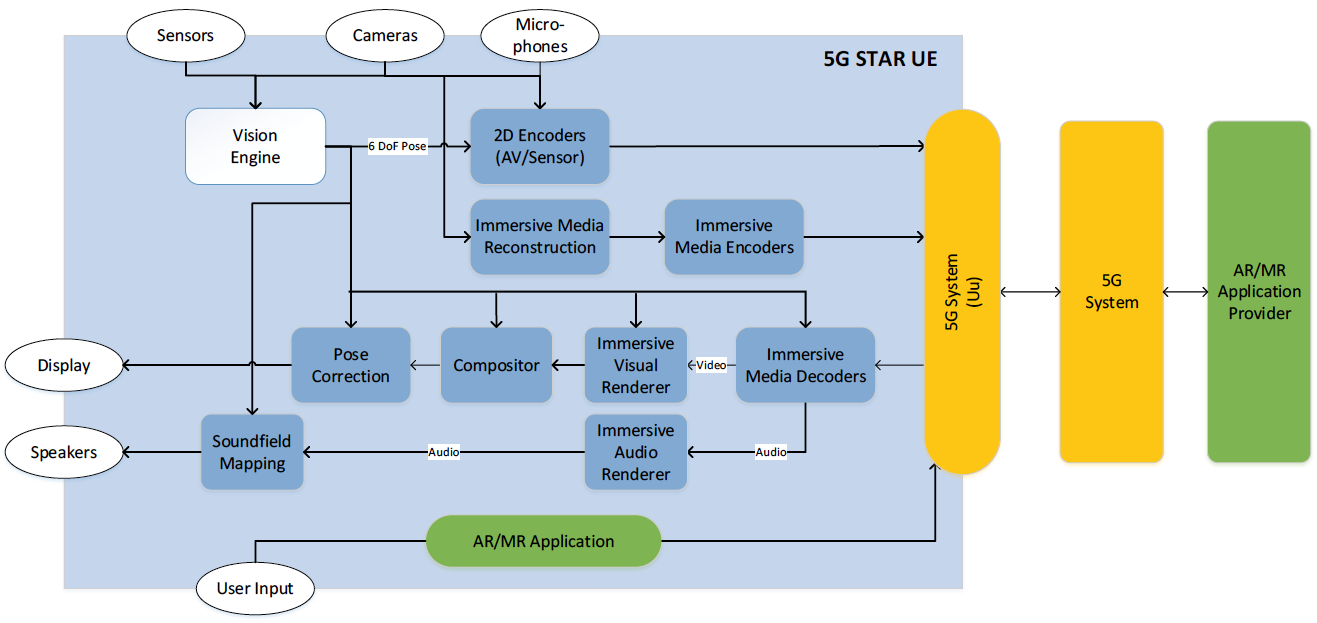

Type 1: 5G STandalone AR (STAR) UE

Functional structure for Type 1: 5G STandalone AR (STAR) UE

Main characteristics of Type 1: 5G STandalone AR (STAR) UE:

- As a standalone device, 5G connectivity is provided through an embedded 5G modem

- User control is local and is obtained from sensors, audio inputs or video inputs

- AR/MR functions are either on the AR/MR device, or split

- The AR/MR application is resident on the device

- Due to the amount of processing required, such devices are likely to have a higher power consumption than the other device types.

- Functionality is more important than design

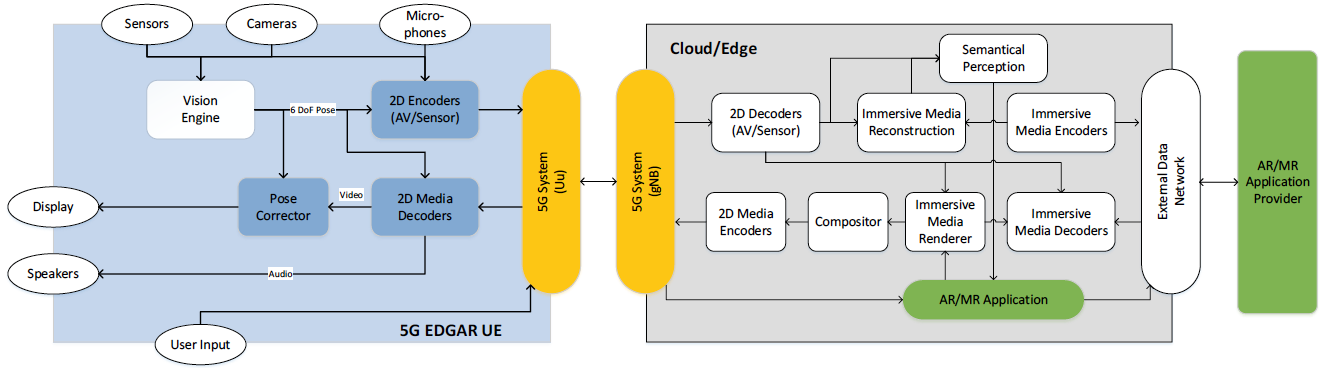

Type 2: 5G EDGe-Dependent AR (EDGAR) UE

Functional structure for Type 2: EDGAR UE

Main characteristics of Type 2: 5G EDGe-Dependent AR (EDGAR) UE:

- As a standalone device, 5G connectivity is provided through an embedded 5G modem

- User control is local and is obtained from sensors, audio inputs or video inputs.

- Media processing is local; the device needs to embed all media codecs required for decoding pre-rendered viewports.

- The basic AR Functions are local to the AR/MR device, and the AR/MR functions are on the 5G cloud/edge

- The AR/MR application resides on the cloud/edge.

- Power consumption on such glasses must be low enough to fit the form factors. Heat dissipation is essential.

- Design is typically more important than functionality.

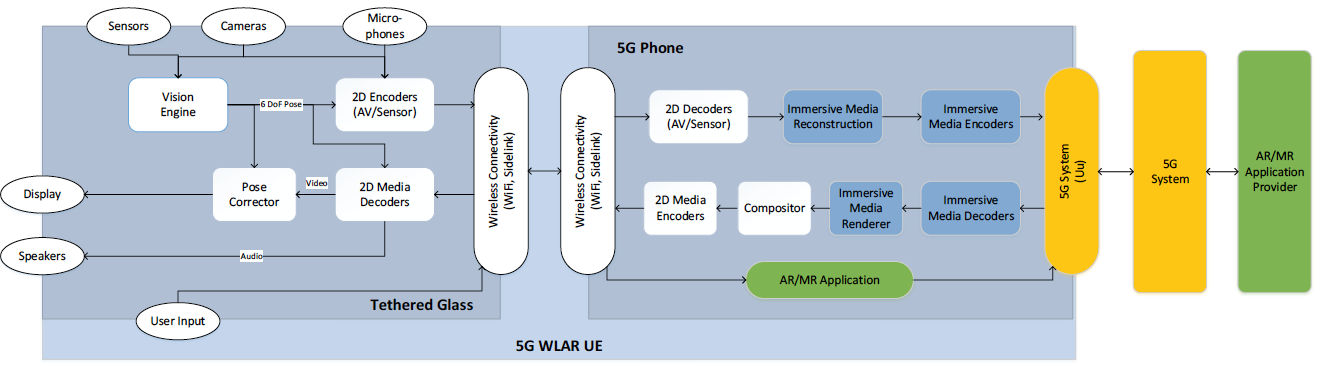

Type 3: 5G WireLess Tethered AR (WLAR) UE

Functional structure for Type-3: 5G WireLess Tethered AR (WLAR) UE

Main characteristics of Type 3: 5G WireLess Tethered AR (WLAR) UE:

- 5G connectivity is provided through a tethered device which embeds the 5G modem. Wireless tethered connectivity is through WiFi or 5G sidelink. BLE (Bluetooth Low Energy) connectivity may be used for audio.

- User control is mostly provided locally to the AR/MR device; some remote user interactions may be initiated from the tethered device as well.

- AR/MR functions (including SLAM/registration and pose correction) are either in the AR/MR device, or split.

- While media processing (for 2D media) can be done locally to the AR glasses, heavy AR/MR media processing may be done on the AR/MR tethered device or split.

- While such devices are likely to use significantly less processing than Type 1: 5G STAR devices by making use of the processing capabilities of the tethered device, they can still support a lot of local media and AR/MR processing. Such devices are expected to provide 8-10h of battery life while keeping a low weight.

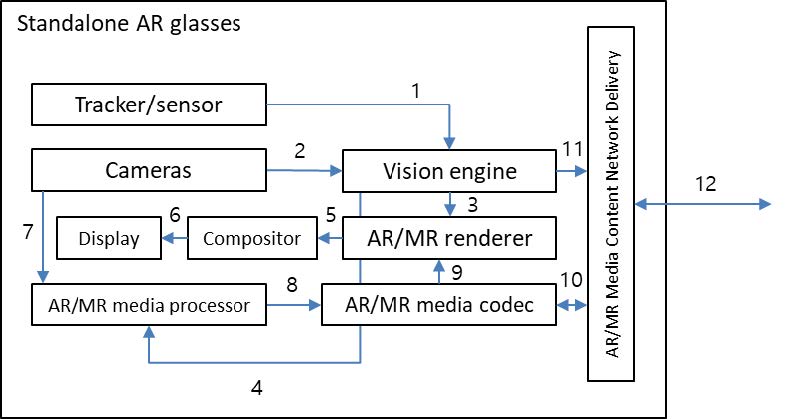

Interfaces

The following interfaces between the different functions of the device functional structure for device type #1 are defined:

Interfaces for device type #1 functional structure

- Tracker/sensor output interface

- Raw outputs from various tracking and sensor device components, namely IMUs (inertial measurement units). These outputs are typically sent as inputs to the vision engine, which performs operations such as 6DoF pose generation.

- AR/MR devices might contain the following related components:

- Accelerometer – used by the system to determine linear acceleration along the X, Y and Z axes and gravity

- Gyro – used by the system to determine rotations

- Magnetometer – used by the system to estimate absolute orientation

- Cameras output to Vision engine interface

- Depending on the device hardware, different types of camera outputs may be available as inputs into the vision engine or the camera outputs may be sent to the network. Possible camera signals include:

- Visible light environment tracking cameras – typically gray-scale cameras used by a system for head tracking and map building

- Depth cameras (ToF) – these may be short-throw (near-depth) or long-throw (far-depth), depending on the application desired (e.g. hand tracking, or spatial mapping)

- Infrared (IR) cameras – used for eye tracking and hand tracking

- World facing RGB camera – used for image processing tasks and locating the camera’s position in and perspective on the scene

- Media camera for capturing persons or objects in the scene for media data generation and consumption

- Vision engine to AR/MR renderer interface

- The vision engine provides all the information required for the AR/MR renderer to adapt the rendering for a consistent combination of virtual content with the real world [4.3.0]. This information may be the output of vision engine processes such as spatial mapping, scene understanding, and room scan visualization. Vision engine processes are typically SLAM related, differing depending on the specific device and/or platform implementation.

- One typical output of the vision engine is the device/user pose information, which may include estimation properties depending on the service and application.

- Additional output can be 3D objects or representations for media consumption.

- Vision engine to AR/MR media processor interface

- The vision engine provides all the information required for the AR/MR media processor to perform processes such as 3D modelling. Information from the vision engine as used by the AR/MR renderer may also be sent to and used by the AR/MR media processor for relevant media processing.

- AR/MR renderer to Compositor interface

- The AR/MR renderer typically outputs a rendered 2D frame (per eye) for a given time instance according to the device/user’s current pose in the surrounding environment. This rendered 2D frame is sent as an input to the compositor. In the future, non-2D displays (e.g. light field 3D displays) are expected to require a different output from the AR/MR renderer.

- Compositor to Display interface

- Rendered 2D frames are composited by the compositor before being passed onto the device display. The compositor may perform certain pose correction functions depending on the overall rendering pipeline used.

- Cameras output to Media processor interface

- RGB and depth cameras are used to capture RGB/depth images and videos, which can be consumed as regular 2D or 3D images and videos, or may be used as inputs to a media processor for further media processing.

- Media processor to AR/MR media codec interface

- A media processor performs processes such as 3D modelling, in order to output uncompressed media data into the AR/MR media codec for encoding. One example of the media data at this interface is raw point cloud media data.

- AR/MR media codec to AR/MR renderer interface

- Compressed AR/MR media content intended for rendering, composition and display is decoded by the AR/MR media codec, and fed into the AR/MR renderer. Media data through this interface may be different depending on the rendering pipeline.

- AR/MR media codec to Network delivery interface

- Media contents that are captured or generated by the device (from interfaces 5 and 6) are encoded by the AR/MR media codec before being passed onto the network delivery entity for packetization and delivery over the 5G network. For 2D AR/MR media contents, this is typically a compressed video bitstream that is conformant to the video codec used by the AR/MR media codec.

- Media contents that are received through the network delivery interface over the 5G network are depacketized by the network delivery entity and fed into the AR/MR media codec. The subsequent decoded bitstream is handled through interfaces 7 and 4.

- Vision engine to Network delivery interface

- Certain vision engine outputs may be sent to a remote processor which exists outside the device. Such data is passed from the vision engine to the network delivery entity for packetization and delivery over the 5G network.

- Network delivery interface

- Network interface for AR/MR content delivery over the 5G network. In device type #1 for which interfaces are defined in this clause, the 5G modem exists inside the standalone AR glasses device.
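The interfaces listed above can be read as a directed graph between the functions of device type #1. The following sketch encodes that reading as an adjacency list and traces a data path with a breadth-first search. The node names are labels chosen for this example, and the edge directions are an interpretation of the interface descriptions above rather than a normative model.

```python
from collections import deque

# Directed edges follow the interface descriptions in this clause.
# Node names are illustrative labels, not identifiers from the report.
INTERFACES = {
    "tracker_sensors": ["vision_engine"],
    "cameras": ["vision_engine", "media_processor"],
    "vision_engine": ["renderer", "media_processor", "network_delivery"],
    "media_processor": ["media_codec"],
    "media_codec": ["renderer", "network_delivery"],
    "network_delivery": ["media_codec"],  # receive direction: depacketize, then decode
    "renderer": ["compositor"],
    "compositor": ["display"],
    "display": [],
}


def shortest_path(src, dst):
    """Breadth-first search for the shortest chain of interfaces from src to dst."""
    queue = deque([[src]])
    visited = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for nxt in INTERFACES.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # no chain of interfaces connects src to dst


# Downlink: media received over the 5G network and presented on the display.
print(shortest_path("network_delivery", "display"))
# Local tracking loop: sensor outputs influencing what is displayed.
print(shortest_path("tracker_sensors", "display"))
```

Tracing paths in this way is a quick check that every function involved in a given use case is actually connected by one of the defined interfaces.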