Different Types of Realities

- Virtual Reality (VR) is a rendered version of a delivered visual and audio scene. The rendering is designed to mimic the visual and audio sensory stimuli of the real world as naturally as possible to an observer or user as they move within the limits defined by the application. Virtual reality usually, but not necessarily, requires a user to wear a head-mounted display (HMD), to completely replace the user's field of view with a simulated visual component, and to wear headphones, to provide the user with the accompanying audio. Some form of head and motion tracking of the user in VR is usually also necessary so that the simulated visual and audio components can be updated and, from the user's perspective, items and sound sources remain consistent with the user's movements. Additional means to interact with the virtual reality simulation may be provided but are not strictly necessary.

- Augmented Reality (AR) is when a user is provided with additional information or artificially generated items or content overlaid upon their current environment. Such additional information or content will usually be visual and/or audible and their observation of their current environment may be direct, with no intermediate sensing, processing and rendering, or indirect, where their perception of their environment is relayed via sensors and may be enhanced or processed.

- Mixed Reality (MR) is an advanced form of AR where some virtual elements are inserted into the physical scene with the intent to provide the illusion that these elements are part of the real scene.

- Extended reality (XR) refers to all real-and-virtual combined environments and human-machine interactions generated by computer technology and wearables. It includes representative forms such as AR, MR and VR and the areas interpolated among them. The levels of virtuality range from partial sensory inputs to fully immersive VR. A key aspect of XR is the extension of human experiences, especially relating to the senses of existence (represented by VR) and the acquisition of cognition (represented by AR).

Other relevant terms:

- Immersion is the sense of being surrounded by the virtual environment.

- Presence is the feeling of being physically and spatially located in the virtual environment.

- Parallax is the relative movement of objects as a result of a change in point of view. When objects move relative to each other, users tend to estimate their size and distance.

- Occlusion is the phenomenon in which one object in a 3D space blocks another object from being viewed.

Degrees of Freedom and XR Spaces

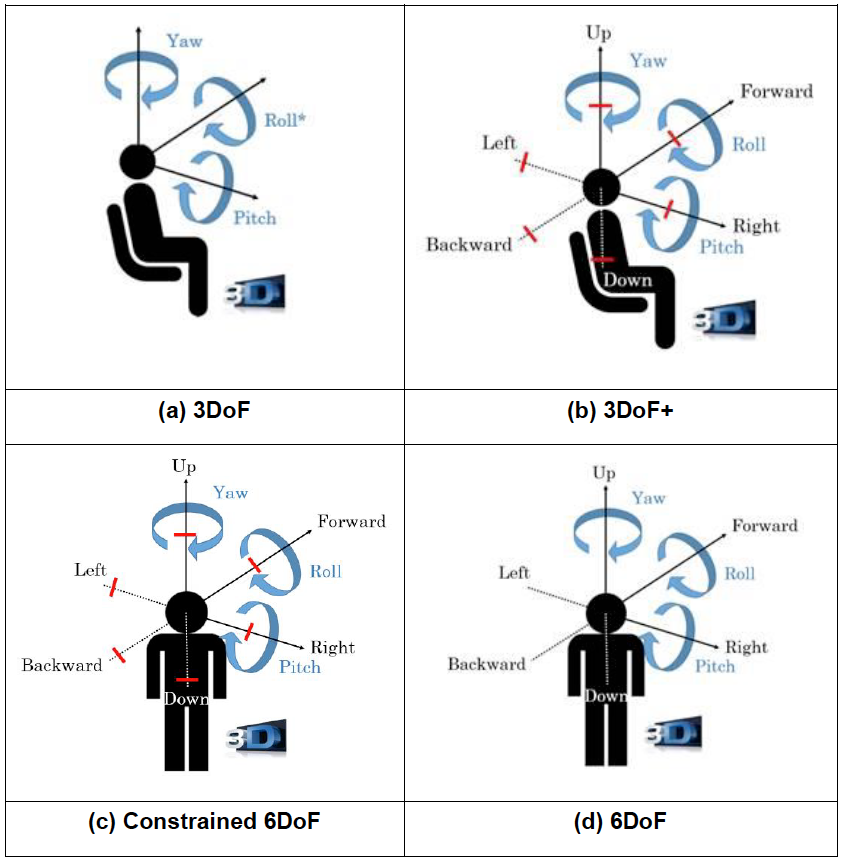

A user acts in and interacts with extended realities. Actions and interactions involve movements, gestures and body reactions. The Degrees of Freedom (DoF) describe the number of independent parameters used to define the movement of a viewport in 3D space. The following types of Degrees of Freedom are described:

- 3DoF: Three rotational and unlimited movements around the X, Y and Z axes (respectively pitch, yaw and roll). A typical use case is a user sitting in a chair looking at 3D 360 VR content on an HMD.

- 3DoF+: 3DoF with additional limited translational movements (typically, head movements) along X, Y and Z axes. A typical use case is a user sitting in a chair looking at 3D 360 VR content on an HMD with the capability to slightly move their head up/down, left/right and forward/backward (see Figure 4.1-3 (b)).

- 6DoF: 3DoF with full translational movements along X, Y and Z axes. Beyond the 3DoF experience, it adds (i) moving up and down (elevating/heaving); (ii) moving left and right (strafing/swaying); and (iii) moving forward and backward (walking/surging). A typical use case is a user freely walking through 3D 360 VR content (physically or via dedicated user input means) displayed on an HMD (see Figure 4.1-3 (d)).

- Constrained 6DoF: 6DoF with constrained translational movements along X, Y and Z axes (typically, a couple of steps walking distance). A typical use case is a user freely walking through VR content (physically or via dedicated user input means) displayed on an HMD but within a constrained walking area (see Figure 4.1-3 (c)).

- Another term for Constrained 6DoF is Room Scale VR, a design paradigm for XR experiences that allows users to freely walk around a play area, with their real-life motion reflected in the XR environment.

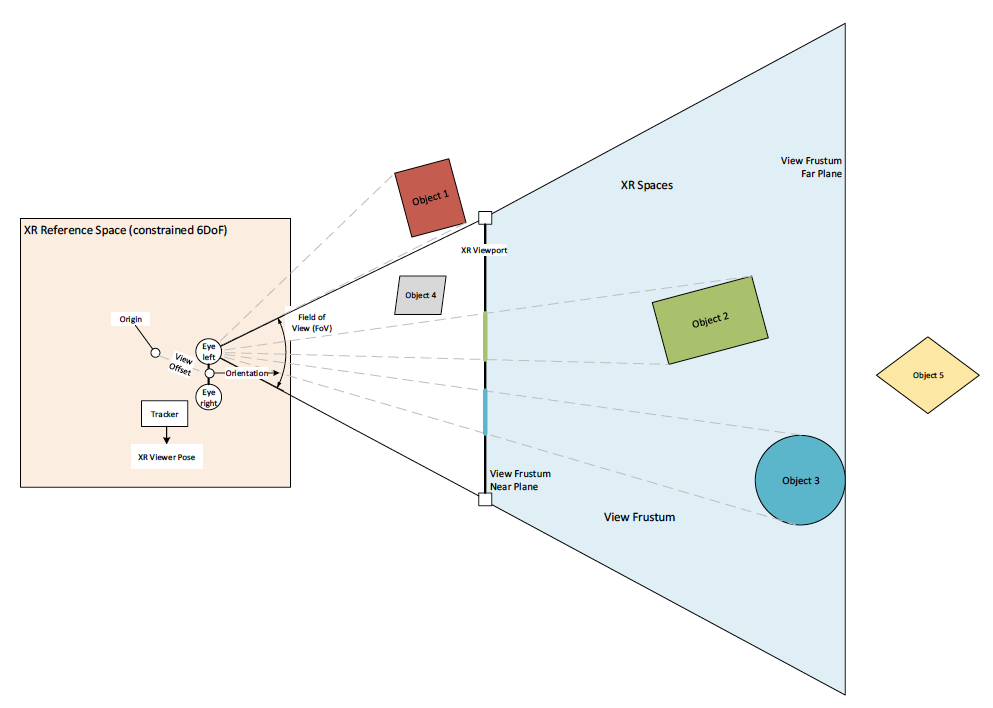

Spaces provide a relation of the user’s physical environment with other tracked entities. An XR Space represents a virtual coordinate system with an origin that corresponds to a physical location. The world coordinate system is the coordinate system in which the virtual world is created. Coordinate systems are essential for operating in 3-dimensional virtual and real worlds for XR applications. A coordinate system is expected to be a rectangular Cartesian system in which all axes are equally scaled.

A position in the XR space is a 3D vector representing a position within a space and relative to the origin.

An XR reference space is one of several common XR Spaces that can be used to establish a spatial relationship with the user’s physical environment. An XR reference space may be restricted, which determines how far the user is able to move. Unless the user reconfigures it, an XR reference space is static within an XR session, i.e. the space the user can move in is restricted by the initial definition.

- For 3DoF, the XR reference space is limited to a single position.

- For 3DoF+, the XR reference space is limited to a small space centered around a single position: a small bounding box around that position is provided, limited to positions attainable with head movements only.

- For constrained 6DoF, the XR reference space has a native bounds geometry describing the border around the space, which the user can expect to safely move within. Such borders may, for example, be described by a polygonal boundary given as an array representing a loop of points at the edges of the safe space. The points describe offsets from the origin in meters.

- For 6DoF, the XR reference space is unlimited and basically includes the whole universe.
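The four restrictions above can be illustrated with a small position check. This is only a sketch under stated assumptions: the names `DoF`, `inside_polygon` and `position_allowed` are hypothetical, the floor is assumed to be the x-z plane with y pointing up, and the 3DoF+ bounding box is assumed to be a cube described by a single half-extent in meters.

```python
from enum import Enum


class DoF(Enum):
    THREE = "3DoF"
    THREE_PLUS = "3DoF+"
    CONSTRAINED_SIX = "constrained 6DoF"
    SIX = "6DoF"


def inside_polygon(x, z, boundary):
    """Ray-casting test: is floor point (x, z) inside the closed loop of points?"""
    inside = False
    n = len(boundary)
    for i in range(n):
        x1, z1 = boundary[i]
        x2, z2 = boundary[(i + 1) % n]  # the last point connects back to the first
        # Only edges that straddle the horizontal line through z can be crossed.
        if (z1 > z) != (z2 > z):
            x_cross = x1 + (z - z1) * (x2 - x1) / (z2 - z1)
            if x < x_cross:
                inside = not inside
    return inside


def position_allowed(mode, position, *, half_extent=0.0, boundary=()):
    """Check a position (offset from the space origin, in meters) against the space."""
    x, y, z = position
    if mode is DoF.THREE:
        # Single position: only the origin itself is valid.
        return (x, y, z) == (0.0, 0.0, 0.0)
    if mode is DoF.THREE_PLUS:
        # Small bounding box around the origin (assumed cubic here).
        return all(abs(c) <= half_extent for c in (x, y, z))
    if mode is DoF.CONSTRAINED_SIX:
        # Polygonal boundary on the floor plane; height is not checked.
        return inside_polygon(x, z, boundary)
    return True  # 6DoF: unlimited


# Hypothetical 3 m x 2 m play area given as a loop of (x, z) offsets.
play_area = [(-1.5, -1.0), (1.5, -1.0), (1.5, 1.0), (-1.5, 1.0)]
print(position_allowed(DoF.CONSTRAINED_SIX, (0.5, 1.6, 0.2), boundary=play_area))
```

An application could run such a check on each new viewer position, for example to fade in a boundary warning before the user leaves the safe space.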

A simplified diagram (mapped to 2D) of XR Spaces and their relation to the scene is provided below.

An XR View describes a single view into an XR scene for a given time. Each view corresponds to a display or portion of a display used by an XR device to present the portion of the scene to the user. Rendering of the content is expected to be done to well align with the view's physical output properties, including the field of view, eye offset, and other optical properties. A view, among others, has associated:

- a view offset, describing a position and orientation of the view in the XR reference space,

- an eye, describing the eye to which this view is expected to be shown. Displays may support stereoscopic or monoscopic viewing.

An XR Viewport describes a viewport, or a rectangular region, of a graphics surface. The XR viewport corresponds to the projection of the XR View onto a target display. An XR viewport is predominantly defined by the width and height of the rectangular dimensions of the viewport. In 3D computer graphics, the view frustum is the region of space in the modeled world that may appear on the screen, i.e. it is the field of view of a perspective virtual camera system. The planes that cut the frustum perpendicular to the viewing direction are called the near plane and the far plane. Objects closer to the camera than the near plane or beyond the far plane are not drawn. Sometimes, the far plane is placed infinitely far away from the camera so all objects within the frustum are drawn regardless of their distance from the camera.
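The role of the near and far planes can be sketched as a simple visibility test. The function name `in_frustum` is hypothetical, and the sketch assumes a symmetric perspective frustum, a camera at the origin looking along the negative z axis, and an aspect ratio taken from the viewport width and height.

```python
import math


def in_frustum(point, fov_y_deg, width, height, near, far=math.inf):
    """Return True if a camera-space point lies inside the view frustum."""
    x, y, z = point
    depth = -z  # distance along the viewing direction (camera looks down -z)
    # Objects closer than the near plane or beyond the far plane are not drawn.
    if depth < near or depth > far:
        return False
    # Half-size of the frustum cross-section at this depth.
    half_h = depth * math.tan(math.radians(fov_y_deg) / 2)
    half_w = half_h * (width / height)
    return abs(x) <= half_w and abs(y) <= half_h


# A point 500 m away is culled with far=100 but kept with an infinite far plane.
print(in_frustum((0.0, 0.0, -500.0), 90, 1920, 1080, near=0.1, far=100.0))
print(in_frustum((0.0, 0.0, -500.0), 90, 1920, 1080, near=0.1))
```

Leaving `far` at its default of infinity corresponds to the case in which all objects within the frustum are drawn regardless of their distance from the camera.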

An XR Pose describes a position and orientation in space relative to an XR Space:

- The position in the XR space is a 3D-vector representing the position within a space and relative to the origin defined by the (x,y,z) coordinates.

- The orientation in the XR space is a quaternion representing the orientation within a space and defined by a four-dimensional or homogeneous vector with (x,y,z,w) coordinates, with w being the real part of the quaternion and x, y and z the imaginary parts.
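As an illustration of how the two parts of a pose work together, the following sketch maps a point given in a pose's local frame into the XR Space. The names `Pose`, `rotate`, `to_space` and `yaw_quaternion` are hypothetical, the quaternion is assumed to be of unit length, and a right-handed coordinate system with y pointing up is assumed.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    position: tuple     # (x, y, z), relative to the origin of the XR Space
    orientation: tuple  # (x, y, z, w) unit quaternion, w is the real part


def rotate(q, v):
    """Rotate vector v by the unit quaternion q = (x, y, z, w)."""
    qx, qy, qz, qw = q
    vx, vy, vz = v
    # t = 2 * cross(q_xyz, v)
    tx = 2 * (qy * vz - qz * vy)
    ty = 2 * (qz * vx - qx * vz)
    tz = 2 * (qx * vy - qy * vx)
    # v' = v + w * t + cross(q_xyz, t)
    return (
        vx + qw * tx + (qy * tz - qz * ty),
        vy + qw * ty + (qz * tx - qx * tz),
        vz + qw * tz + (qx * ty - qy * tx),
    )


def to_space(pose, local_point):
    """Express a point given in the pose's local frame in the XR Space."""
    rx, ry, rz = rotate(pose.orientation, local_point)
    px, py, pz = pose.position
    return (px + rx, py + ry, pz + rz)


def yaw_quaternion(angle_deg):
    """Unit quaternion for a rotation of angle_deg around the y axis."""
    half = math.radians(angle_deg) / 2
    return (0.0, math.sin(half), 0.0, math.cos(half))


# A pose 1.6 m above the floor, turned 90 degrees to the left.
pose = Pose(position=(1.0, 1.6, 0.0), orientation=yaw_quaternion(90))
print(to_space(pose, (0.0, 0.0, -1.0)))  # approximately (0.0, 1.6, 0.0)
```

The identity orientation is (0, 0, 0, 1), in which case `to_space` reduces to adding the pose position.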

An XR Viewer Pose is an XR Pose describing the state of a viewer of the XR scene as tracked by the XR device. XR Viewer Poses are documented relative to an XR Reference Space.

The views array is a sequence of XR Views describing the viewpoints of the XR scene, relative to the XR Reference Space the XR Viewer Pose was queried with.

Tracking and XR Viewer Pose Generation

In XR applications, an essential element is the use of spatial tracking. Based on the tracking and the derived XR Viewer Pose, content is rendered to simulate a view of virtual content.

XR Viewer Poses and motions can be sensed by Positional Tracking, i.e. the process of tracing the XR scene coordinates of moving objects, such as HMDs or motion controller peripherals, in real time. Positional Tracking makes it possible to derive the XR Viewer Pose, i.e. the combination of position and orientation of the viewer. Different types of tracking exist:

- Outside-In Tracking: a form of positional tracking and, generally, a method of optical tracking. Tracking sensors are placed in a stationary location and oriented towards the tracked object, which moves freely around a designated area defined by sensor coverage.

- Inside-out Tracking: a method of positional tracking commonly used in virtual reality (VR) technologies, specifically for tracking the position of head-mounted displays (HMDs) and motion controller accessories, in which the cameras or other sensors used to determine the object's position in space are located on the device being tracked (e.g. HMD).

- World Tracking: a method to create AR experiences that allow a user to explore virtual content in the world around them with a device's back-facing camera using a device's orientation and position, and detecting real-world surfaces, as well as known images or objects.

- Simultaneous Localization and Mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of the user's location within that environment.

To maintain a reliable registration of the virtual world with the real world as well as to ensure accurate tracking of the XR Viewer Pose, XR applications require highly accurate, low-latency tracking of the device at about 1 kHz sampling frequency. An XR Viewer Pose consists of the orientation and the position. In addition, the XR Viewer Pose needs to be assigned a time stamp. An XR Viewer Pose with its time stamp typically results in packets in the range of 30-100 bytes, such that the generated data is around several hundred kbit/s if delivered over the network.
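The packet size and bitrate figures can be checked with a short calculation. The wire layout used here is purely an assumption for illustration (a 64-bit time stamp followed by 32-bit floats for position and orientation); the names `POSE_FORMAT`, `pack_pose` and `bitrate_kbit_per_s` are hypothetical, and protocol headers are not counted.

```python
import struct

# Assumed layout: int64 time stamp, 3 x float32 position, 4 x float32 orientation.
POSE_FORMAT = "<q3f4f"


def pack_pose(timestamp_us, position, orientation):
    """Serialize one time-stamped viewer pose into bytes."""
    return struct.pack(POSE_FORMAT, timestamp_us, *position, *orientation)


def bitrate_kbit_per_s(packet_bytes, sample_rate_hz):
    """Payload bitrate for fixed-size packets sent at a given sampling rate."""
    return packet_bytes * 8 * sample_rate_hz / 1000


packet = pack_pose(1_000_000, (0.0, 1.6, 0.0), (0.0, 0.0, 0.0, 1.0))
print(len(packet))                            # 36 bytes
print(bitrate_kbit_per_s(len(packet), 1000))  # 288.0 kbit/s at 1 kHz
print(bitrate_kbit_per_s(30, 1000))           # 240.0 kbit/s
print(bitrate_kbit_per_s(100, 1000))          # 800.0 kbit/s
```

At 1 kHz, the 30-100 byte range therefore corresponds to roughly 240-800 kbit/s of pose payload, which matches the "several hundred kbit/s" figure.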

XR Spatial Mapping and Localization

Spatial mapping, i.e. creating a map of the surrounding area, and localization, i.e. establishing the position of users and objects within that space, are some of the key areas of XR and in particular AR. Multiple sensor inputs are combined to get a better localization accuracy, e.g., monocular/stereo/depth cameras, radio beacons, GPS, inertial sensors, etc. Some of the methods involved are listed below:

- Spatial anchors are used for establishing the position of a 3D object in a shared AR/MR experience, independent of the individual perspective of the users. Spatial anchors are accurate within a limited space (e.g. 3m radius for the Microsoft® Mixed Reality Toolkit). Multiple anchors may be used for larger spaces.

- Simultaneous Localization and Mapping (SLAM) is used for mapping previously unknown environments, while also maintaining the localization of the device/user within that environment.

- Visual Localization, e.g., vSLAM, Visual Positioning System (VPS), etc., performs localization using visual data from, e.g., a mobile camera, combined with other sensor data.
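The use of multiple spatial anchors for larger spaces can be sketched as follows: an object is attached to the nearest anchor within that anchor's accurate range and stored as an offset from it. This is not the API of any particular toolkit; the function `nearest_anchor`, the anchor names and the default 3 m radius (taken from the example above) are illustrative assumptions.

```python
import math


def nearest_anchor(anchors, point, max_radius=3.0):
    """Pick the closest anchor within max_radius meters of point.

    anchors maps an anchor name to its (x, y, z) origin. Returns
    (name, offset_from_anchor), or None if no anchor is close enough.
    """
    best = None
    for name, origin in anchors.items():
        distance = math.dist(origin, point)
        if distance <= max_radius and (best is None or distance < best[0]):
            offset = tuple(p - o for p, o in zip(point, origin))
            best = (distance, name, offset)
    return None if best is None else (best[1], best[2])


# Two hypothetical anchors placed 5 m apart in a shared space.
anchors = {"door": (0.0, 0.0, 0.0), "window": (5.0, 0.0, 0.0)}
print(nearest_anchor(anchors, (4.0, 0.0, 1.0)))   # ('window', (-1.0, 0.0, 1.0))
print(nearest_anchor(anchors, (20.0, 0.0, 0.0)))  # None
```

Storing the offset relative to an anchor, rather than an absolute position, is what keeps the object's placement independent of the individual perspective of each user.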

Spatial mapping and localization can be done on the device. However, network elements can support the operations in different ways:

- Cloud services may be used for storing, retrieving and updating spatial data. For larger public spaces, crowdsourcing may be used to keep the data updated and available to all.

- A Spatial Computing Server collects data from multiple sources, including, but not limited to, visual and inertial data streamed from XR devices, and processes it to create spatial maps. The service can then provide this information to other users and also assist in their localization based on the data received from them.

Indoor and outdoor mapping and localization are expected to have different requirements and limitations. Privacy concerns need to be explored by the service provider when scanning indoor spaces and storing spatial features, especially when it is linked to global positioning.

Tracking adds the concept of continuous localisation over time.